Restoring SSH access using the web console in VMware ESXi

Disclaimer

Let's start this post by pointing out that this is an incredibly hacky (read: bad) way to recover the (mostly default?) sshd_config file on an ESXi installation. When I first made the change, I did not test that it actually persisted after a reboot. I didn't find out until I installed an update, 262 days after the last configuration change. Oops. I definitely don't recommend doing this unless you have no other choice and don't have support from VMware.

As with everything you read online, I provide no support or warranty and make no other claims if you follow these steps. I am not responsible if you mess up your installation!

Background

With all that out of the way, let me set the stage. My ESXi instance is hosted in a remote colocation center, without a remote IP KVM (since I am too cheap to pay for one). The server is not exposed to the internet, so I need to VPN in to reach the web console (HTTPS) or SSH. This has served me well for the last half year. Even with the VPN requirement, I also edited the SSHD configuration file to require private key authentication and to stop the root user from logging in via SSH (security in depth).

The update

The server had been up for 262 days when I decided to apply the latest ESXi update. After the update, I could log in via the web console, but SSH access was denied. Despite the convenient timing, the update did not cause any of these problems; they would have occurred if the server had rebooted for any other reason.

I originally followed this VMware KB article on how to secure SSH. I diligently copied my SSH keys into /etc/ssh/keys-<username>/authorized_keys as described. What the article fails to mention is that those keys do not persist after a reboot. The changes to sshd_config do persist, however. After the reboot, the only keys left were root's, and PermitRootLogin no was still set. I had lost SSH access to my instance.
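Once you can read sshd_config again, a quick way to confirm this kind of diagnosis is to check which PermitRootLogin value sshd will actually use. The sketch below assumes the plain "Keyword value" syntax and no Match blocks. Per sshd_config(5), keywords are case-insensitive and the first value obtained wins, so later or commented-out lines do not count. The function name permit_root_login is only illustrative.

```sh
#!/bin/sh
# Print the PermitRootLogin value sshd would read from an sshd_config-style file.
# Assumptions: "Keyword value" syntax, no Match blocks. sshd keeps the FIRST
# value it sees for a keyword, and keywords are case-insensitive.
# Prints nothing and returns non-zero if the directive is absent.
permit_root_login() {
    awk '
        # Commented-out lines have "#" in their first field, so they never match.
        tolower($1) == "permitrootlogin" {
            print $2
            found = 1
            exit
        }
        END { exit !found }
    ' "$1"
}

# Example, on the ESXi host:
#   permit_root_login /etc/ssh/sshd_config
```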

Confirming SSH Keys

During the troubleshooting process, I came across some neat ways to verify and set the SSH keys for the root account. I didn't find much documentation on how to do it. The endpoint is hosted at the same site and port as your web console. If you access your console via https://server/ui, you can access files via the /host path: https://server/host.

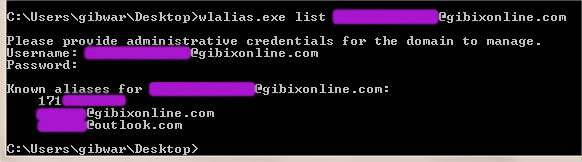

From what I have been able to gather, uploading files to this endpoint requires both Basic authorization and a vmware_client=VMware cookie on the PUT request.
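The sketch below makes those two requirements concrete by printing the headers such a request would carry. The function names are illustrative. With curl, the -u user:password and -b 'vmware_client=VMware' options should produce equivalent headers.

```sh
#!/bin/sh
# Build the two request headers the /host endpoint appears to need:
# HTTP Basic credentials and the vmware_client=VMware cookie.
# Basic auth is simply base64("user:password"); tr removes any line
# wrapping that base64 may add for long credentials.
basic_auth_header() {
    printf 'Authorization: Basic %s\n' \
        "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

esxi_host_headers() {
    basic_auth_header "$1" "$2"
    printf 'Cookie: vmware_client=VMware\n'
}

# Example:
#   esxi_host_headers root 'your-password'
```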

Checking the current root SSH keys

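I used PowerShell Core to run the following commands. They should also work in Windows PowerShell with small changes, because the -Authentication and -SkipCertificateCheck parameters of Invoke-WebRequest only exist in PowerShell 6 and later. They can also be adapted to any other client of choice (curl, etc.).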
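A rough curl-based equivalent of this check might look like the untested sketch below. The file name ssh_root_authorized_keys comes from the upload URL used in this post. ESXI_USER and ESXI_PASS are placeholder environment variables, and the function names are illustrative.

```sh
#!/bin/sh
# Read a file through the ESXi /host endpoint with curl (untested sketch).
# ESXI_USER and ESXI_PASS are placeholder environment variables.
esxi_host_url() {
    # $1: host name used for the web console, $2: file name under /host
    printf 'https://%s/host/%s\n' "$1" "$2"
}

esxi_get_file() {
    # -s: quiet, -k: accept a self-signed certificate (like -SkipCertificateCheck),
    # --fail: non-zero exit on HTTP errors, -u: Basic auth, -b: send the cookie.
    curl -sk --fail -u "$ESXI_USER:$ESXI_PASS" -b 'vmware_client=VMware' \
        "$(esxi_host_url "$1" "$2")"
}

# Example:
#   export ESXI_USER=root ESXI_PASS='your-password'
#   esxi_get_file server ssh_root_authorized_keys
```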

Updating the root SSH keys

Using the output of $r.Content above, I took the current content and added my new SSH key (at the time I thought it was a key issue, not a configuration issue). I then uploaded the keys to the server, again using PowerShell.

```powershell
% $keys = "$($r.Content)`n$(Get-Content ~/.ssh/esxi.pub)`n"
% $keys
ssh-rsa AAAA...
ecdsa-sha2-nistp256 AAAA...
% Invoke-WebRequest -Credential $creds -Authentication Basic `
>> -Uri "https://server/host/ssh_root_authorized_keys" -SkipCertificateCheck `
>> -Method PUT -Headers @{Cookie="vmware_client=VMware"} -Body $keys

StatusCode        : 200
StatusDescription : OK
...
```

Repeating the code above for checking the keys confirmed it added the new key as expected.
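For anyone using curl instead, the merge, upload, and re-check steps could be sketched as follows. This is untested against ESXi. ESXI_USER and ESXI_PASS are placeholders, and merge_keys, key_present and esxi_put_file are illustrative names. Unlike the PowerShell one-liner, merge_keys skips a key that is already present, so running it twice does not create duplicates.

```sh
#!/bin/sh
# A curl take on the upload above (untested sketch). ESXI_USER / ESXI_PASS
# are placeholder environment variables; function names are illustrative.

# Print the current keys, then any lines of the new key file not already present.
# awk re-emits every line with a newline, so a missing final newline in the
# current file cannot glue two keys together.
merge_keys() {
    # $1: current authorized_keys (as fetched), $2: public key file to add
    awk 'NR == FNR { seen[$0] = 1; print; next }
         !($0 in seen) { print }' "$1" "$2"
}

# Succeeds if some line of $1 exactly matches a line of the key file $2.
key_present() {
    grep -qxF -f "$2" "$1"
}

esxi_put_file() {
    # $1: host, $2: file name under /host, $3: local file; -T uploads with PUT.
    curl -sk --fail -u "$ESXI_USER:$ESXI_PASS" -b 'vmware_client=VMware' \
        -T "$3" "https://$1/host/$2"
}

# Usage, with current_keys fetched as in "Checking the current root SSH keys":
#   merge_keys current_keys ~/.ssh/esxi.pub > new_keys
#   esxi_put_file server ssh_root_authorized_keys new_keys
```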

Attempting to create a custom VIB

After getting a new SSH key onto the server and confirming that it didn't work for my admin user or the root user, I finally had the wherewithal to check the logs on the web console. They showed that root login wasn't even being attempted because root access was denied. That meant my sshd_config file was still intact after the update, but the keys for my admin user were missing and root was blocked from logging in. How could I get access back? It turns out a custom VIB was the solution! Or so I thought. While I ran into several posts on the subject, nothing really worked to the extent I was hoping for. NOTE: this is where having physical access would have allowed me to fix the file and be on my merry way. If you have physical access, USE IT!

The first issue is that, without VMware's blessing, you cannot create a "community" bundle file that has permissions to the core system (/etc, /usr stuff). A community bundle can still write to /opt or create firewall rules.

Unfortunately, I needed to fix the sshd_config file, so a community version was not going to work. Let me tell you, I tried. I grabbed VMware's VIB builder program and got SUSE Linux Enterprise 11 SP2 running (see the firewall link above for directions; don't go higher, or the tool won't install!). Then I built and deployed my file. I even came across a way to use "partner" files without VMware's signature, but that only works in a setup with vSphere. I also found I could force the install of my community VIB... if I had SSH access.

Nothing I found would work in my scenario. Curiously, though, every search I ran that involved "ssh" and "vib" kept returning results for some VMware community Department of Defense thing. I kept ignoring it, because why would I need some DoD thing? Well, as I was about to order an IP KVM, I decided to check out the link.

Finding the "right" VIB

After my crash course in how VIBs work and their terminology, I was able to read through the instructions and understand what the VIB actually did. I figured it would be quite complex, but all it does is edit 4 files on your system:

/etc/issue: adds a super scary login banner warning about accessing United States Government infrastructure

/etc/pam.d/passwd: updates password complexity requirements

/etc/ssh/sshd_config: "adds necessary daemon settings"

/etc/vmware/welcome: adds the same super scary warning to ESXi's direct console screen (physical access).

Reading deeper, the DoD VIB also comes in two flavors: one that enables root SSH access and one that denies it. Bingo! The changes in the VIB were safe enough that they wouldn't impact the system, and the enabling flavor sets PermitRootLogin to yes! I quickly downloaded the zip file and promptly installed the dod-stigvib-67-re-1.0.0.1.14639231.vib file. Et voilà, I had SSH access to my system again through the root account! I didn't want root access, so I set my admin user's keys again and went to disable root login once more. Or so I was hoping. It turns out that when a VIB manages a file, you cannot edit that file, even as the root user. I uninstalled the DoD VIB and crossed my fingers. I still had access!
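With the VIB gone, disabling root logins again comes down to changing one directive. The sketch below assumes a plain sshd_config with no Match blocks, and a file that is no longer VIB-managed. It rewrites the first uncommented PermitRootLogin line, since that is the one sshd uses, or appends the directive if there is none. The function name is illustrative.

```sh
#!/bin/sh
# Set PermitRootLogin in an sshd_config-style file (illustrative sketch).
# The FIRST uncommented PermitRootLogin line is rewritten, because sshd uses
# the first value it reads; later duplicates are left alone. If no such line
# exists, the directive is appended. Output goes to a temp file first and is
# then copied over the original, which keeps the file's owner and mode.
set_permit_root_login() {
    conf=$1 value=$2
    tmp="$conf.tmp.$$"
    awk -v value="$value" '
        !done && tolower($1) == "permitrootlogin" {
            print "PermitRootLogin " value
            done = 1
            next
        }
        { print }
        END { if (!done) print "PermitRootLogin " value }
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Example, on the ESXi host:
#   set_permit_root_login /etc/ssh/sshd_config no
```

sshd has to be restarted to pick up the change. On ESXi 6.x, I believe /etc/init.d/SSH restart does this. Keep your current session open until a fresh login succeeds.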

Using a shell script to set SSH keys on reboot

With access restored for the root user and the DoD customizations removed, I once again had full access to the configuration files I needed. I still wanted to disable root access over SSH and somehow persist my esxi-admin user's keys across reboots. How to pull this off?

It turns out that I had actually found the answer in my previous VIB author searches.

While the directions are listed for ESXi 4.x/5.x, they also work fine on 6.x. Custom files, such as /etc/ssh/keys-<username>, are not backed up by the system, and you cannot force a backup by creating .#files or other means. However, you can edit /etc/rc.local.d/local.sh, and the system backs it up and persists it across reboots! Once I found these nuggets, I also found people copying SSH keys to the persisted volume mounts and then copying them to a local ~/.ssh file for the user. Since I have a single user and my root account is effectively disabled, I opted for a simpler approach: copying the SSH keys from the root user to my user on boot.

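```sh
# Appended to local.sh, above its final "exit 0" line, so it runs on every boot.
# Recreate esxi-admin's key directory, which does not survive a reboot.
mkdir -p /etc/ssh/keys-esxi-admin
# Reuse root's authorized_keys, which does persist.
cp /etc/ssh/keys-root/authorized_keys /etc/ssh/keys-esxi-admin/authorized_keys
# Give the copy to esxi-admin.
chown esxi-admin:esxi-admin /etc/ssh/keys-esxi-admin/authorized_keys
```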
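For comparison, the approach other people described could be sketched as follows: keys are kept on a persistent datastore and copied into place at boot. The source layout $src/<username>/authorized_keys and the example path /vmfs/volumes/datastore1/ssh-keys are hypothetical. The destination follows the /etc/ssh/keys-<username>/authorized_keys convention from the KB article. The function name is illustrative.

```sh
#!/bin/sh
# Copy per-user authorized_keys from a persistent location into place.
# Hypothetical source layout: $src/<username>/authorized_keys
# Destination: $dest/keys-<username>/authorized_keys (on ESXi, $dest=/etc/ssh)
restore_ssh_keys() {
    src=$1 dest=$2
    for keyfile in "$src"/*/authorized_keys; do
        [ -f "$keyfile" ] || continue        # no match: the glob stays literal
        user=${keyfile%/authorized_keys}     # strip the file name...
        user=${user##*/}                     # ...and keep the directory name
        mkdir -p "$dest/keys-$user"
        cp "$keyfile" "$dest/keys-$user/authorized_keys"
        # Hand the copy to the account if it exists (needs root on ESXi).
        if id "$user" >/dev/null 2>&1; then
            chown "$user" "$dest/keys-$user/authorized_keys"
        fi
    done
}

# Example, from local.sh above its final "exit 0" (datastore path hypothetical):
#   restore_ssh_keys /vmfs/volumes/datastore1/ssh-keys /etc/ssh
```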

So there you have it: a way to recover SSH access after a modified sshd_config file locks you out. It doesn't need physical access, though it does need web console access. If you have physical access, please use it instead of this long thread of bad ideas. As stated at the beginning, I am not responsible if you use this and completely break your installation. No warranty, support, etc., is implied.